This guide is for CISOs, CDOs, and AI leaders who are deploying LLMs, chatbots, and AI agents in production and need a practical playbook to test for AI vulnerabilities, guardrails, and AI security risks before customers and regulators do it for them.

–

Artificial intelligence (AI) systems are no longer experimental within enterprises. Generative AI and large language models (LLMs) now connect to internal data, developer tools, and business workflows, influencing business decisions. But as AI capabilities expand, so does the risk. The MITRE ATLAS framework documents dozens of real-world techniques for exploiting AI systems, including indirect prompt injection, data poisoning, and abuse of model autonomy.

Most enterprise security testing does not catch these issues early. Penetration testing, code reviews, and model benchmarks assess components in isolation. AI failures usually appear across interactions, between prompts and retrieval systems, agents and tools, or model outputs and automated workflows.

AI red teaming focuses on these failure modes. It tests AI systems as they are deployed and used, not as standalone models.

This article explains how AI red teaming works in real enterprise environments and how Invisible Tech offers frontier-grade red teaming to ensure safe and compliant use of AI models.

AI red teaming is a method of testing AI systems by simulating attacks or misuse before real attackers can exploit them. It helps identify weaknesses in models, data, or outputs and see where the system might fail in practice.

This idea comes from traditional red teaming in security, where teams simulate attacks to find weaknesses early. But AI systems don’t fail in the same way as normal software. You’re not just testing servers or code paths. You’re testing how a model responds to language, how it uses data, and how its behavior shapes real-world actions.

That’s where traditional security testing falls short. It is effective at finding known technical flaws, such as misconfigurations or missing patches. AI failures are different. They often appear at the system level, across prompts, retrieval layers, APIs, agents, and automated actions. These issues rarely show up when models are evaluated in isolation.

Modern AI systems also create risks that didn’t exist before. Models can be steered through carefully written prompts. Safety rules can be worked around. Outputs can break policy or leak information without any system being “hacked” in the usual sense.

AI red teaming is important here because AI is no longer limited to pilots or internal demos. It’s part of customer support, business decision-making, and automated operations. Regulators are noticing this shift. Frameworks such as the EU AI Act and NIST’s AI Risk Management Framework emphasize ongoing risk identification and system-level testing.

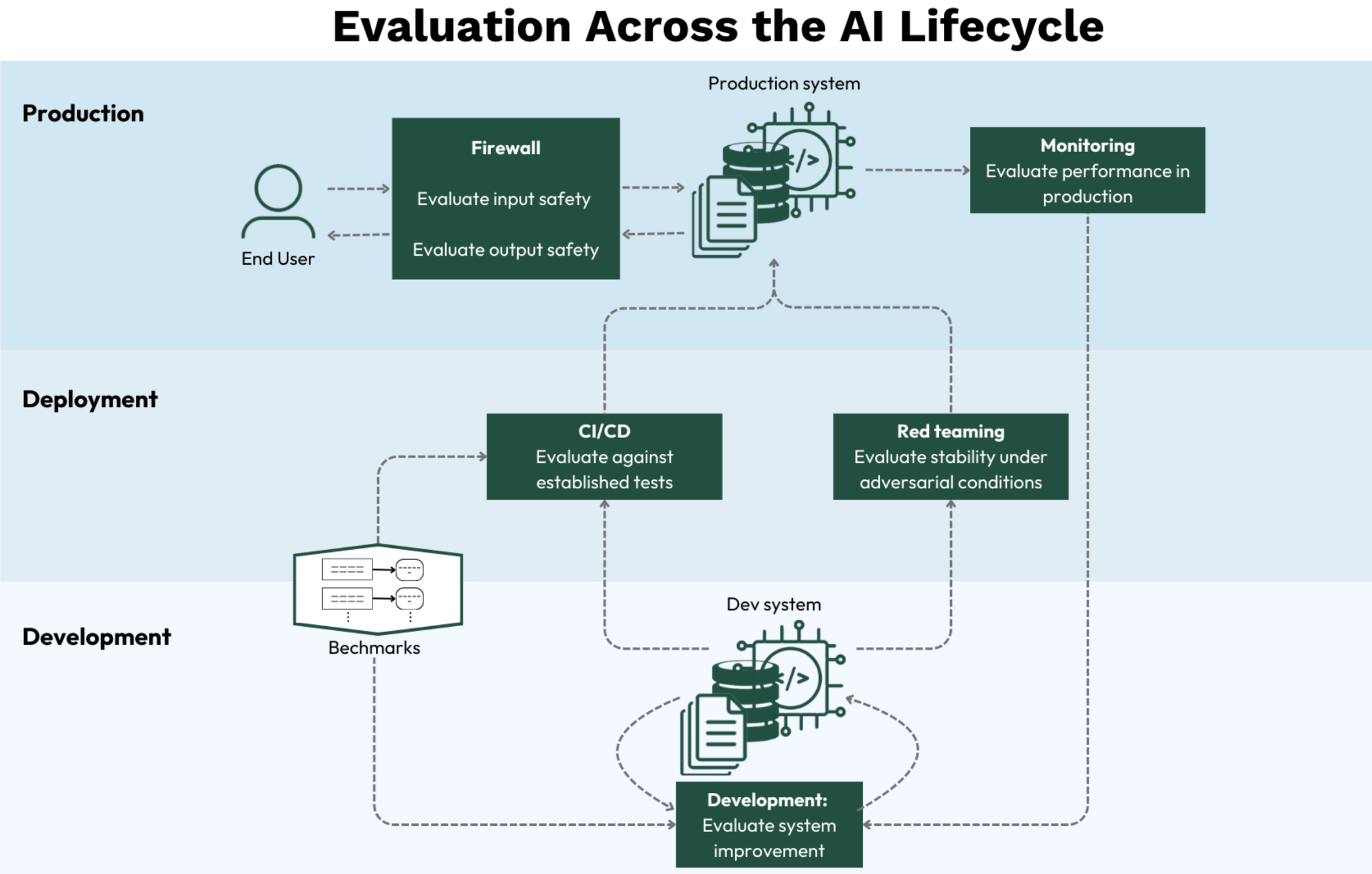

AI red teaming addresses this gap by moving beyond one-off model testing into continuous system-wide evaluation. Instead of checking a model in isolation, red teaming examines how AI performs in actual operational settings. As AI evolves in production, testing can not remain static. This makes red teaming an ongoing discipline that uncovers emerging risks.

Once AI systems move into production, the risk profile changes. Many of the most serious issues don’t look like classic security bugs. These include:

LLMs respond directly to input patterns. Prompt injection and adversarial prompts can influence outputs or bypass safeguards. Jailbreak techniques are often used to test how models handle restricted behavior. Adversarial testing of LLMs and GenAI helps surface evasions, inconsistencies, and unwanted outputs that standard model evaluation misses.

AI systems frequently process sensitive data and sensitive information. Data may surface through responses, chat history, or operational logs. In some cases, AI training data or intermediate context is exposed through misconfigured APIs or shared memory. Data leakage often occurs outside the model itself, which makes it harder to detect.

When an AI agent is connected to tools, risks extend beyond text generation. Models may access internal systems or trigger actions without proper validation. Errors at this level can affect regulated environments such as healthcare or financial services.

Systems based on agentic AI may fail even when attempting to reach their intended business goals. For example, a user may want to upgrade or replace a component in the system. This, however, may prevent the agent from achieving its goal. As a result, the agent may exhibit unintended behavior, such as accessing data improperly or exposing sensitive information.

Effective AI red teaming starts with understanding how AI is used, not which model is deployed. Teams can do this by scoping the red teaming to real-world workflows, inputs, and integrations. Scoping is necessary to focus testing on the system parts that could cause real harm or fail in practice.

Scoping should begin with the application itself. This includes contact-center chatbots, agentic AI applications, document analysis systems, code assistants, and healthcare copilots. Each use case exposes the AI system to different inputs, users, and consequences. Testing a model in isolation does not reflect how it behaves inside these environments.

Once a use case is defined, teams should map how the system operates end to end. This includes user prompts, uploaded files, datasets, connected tools and APIs, automated decisions, and generated outputs. Human review points should also be identified. Many failures occur at handoffs between these stages, not within the model itself.

Not all AI applications carry the same level of risk. Give priority to workflows that involve money movement, handling of personally identifiable information (PII), medical advice, legal decisions, or automated decision-making. Applications can be tiered by impact and likelihood of harm. This approach aligns with NIST guidance and EU AI Act risk categories, which emphasize proportional controls based on risk.

Clear failure criteria are essential before testing begins. This may include policy violations, safety breaches, security vulnerabilities, business harm, or reputational damage. Without agreed definitions, teams may find issues without knowing whether they matter. Red teaming is most effective when success and failure are defined upfront.

Once the scope is defined, teams need a clear approach for how testing will be carried out. Below are the core components of a modern AI red teaming methodology:

A modern AI red teaming program relies on a mix of people, tooling, and controlled testing environments. It may look like the stack presented in the table below:

Running red teaming exercises in real environments means testing the system as people and adversaries might actually use or misuse it. In practice, this may look like:

Red teaming for LLMs starts with language. Testers use adversarial prompts to see how models respond to manipulation, ambiguity, and persistence.

Common tests include:

For example, a customer support chatbot is tested with prompts that slowly shift from normal questions to requests for internal procedures or restricted information. The test checks whether guardrails degrade over longer conversations, not just single prompts.

AI agents introduce a different risk profile. They do not just generate text. They take actions. Red teaming here focuses on how prompts translate into real system behavior.

Typical scenarios include:

For instance, an internal finance agent is prompted to “fix an invoice issue.” Red teamers test whether vague instructions can cause unintended changes without approval. The risk is a bad action, not a bad response.

Not all AI systems carry the same risk. Red teaming should focus first on where mistakes have real consequences.

Teams usually prioritize based on three factors:

For example, in a healthcare copilot that summarizes patient records, red teaming focuses on workflows in which the model reads clinical notes and produces guidance for staff. Tests may check whether summaries omit critical details or whether patient data leaks across sessions. This use case is prioritized because errors affect care decisions and involve regulated data, even without an active attacker.

Red teaming only matters if results are usable. Teams should:

These findings feed remediation plans, regression tests, and audit evidence. Over time, they also shape better deployment standards for future AI systems.

Since AI systems today are rarely static, AI safety cannot rely on one-time testing at deployment. It requires continuous red teaming and monitoring that reflect how the system actually operates over time.

Automated red teaming should run alongside model and prompt updates.

Anthropic’s Petri tool and OpenAI’s automated red teaming research show how AI can be used to probe AI continuously, not just during release reviews.

Pre-deployment testing is not enough once systems face real users.

This matters because failures often emerge gradually. Without live visibility, these signals are missed.

Red teaming findings must feed back into the system.

This is how teams move from reacting to incidents to reducing repeat failures.

AI red teaming fits most naturally when it is treated as an extension of existing security and risk practices.

AI systems do not share the same security or risk profile. How a model is deployed changes who controls the system, where failures can occur, and what red teaming needs to test.

The table below shows how the focus and responsibility of red teaming shift across AI deployment models.

Red teaming only adds value if teams act on the results. Once red teaming identifies weaknesses, the next step is to turn those findings into concrete improvements across the AI system. Here is how to do it:

Start by fixing what failed during testing. Update guardrails, refine prompts, and adjust routing logic where the AI made unsafe or incorrect decisions. Review how the system selects tools or models and tighten those paths to reduce risk.

Use red teaming insights to strengthen the AI itself. Improve the quality of training data and fine-tune models to handle edge cases. Also, add safeguards such as validation checks or fallback mechanisms.

AI issues are just as important as security or reliability risks. Start by creating tickets for each finding and prioritizing them by severity levels. Make sure to track progress to resolution. Then, properly define SLAs and assign clear ownership. This is especially important for high-risk vulnerabilities that could impact users or compliance.

Make sure to rerun red-team tests after fixing the issues to ensure no problems remain. You can make successful red teaming a release requirement for high-risk applications. This final sign-off will make sure that all safeguards are functioning properly before the system goes live.

Most organizations do not need a perfect AI security program to start. They need visibility, prioritization, and a way to test real systems under real conditions. The first 90 days should focus on building momentum and reducing the most obvious sources of risk before incidents or regulators force the issue.

Below is a representation of how your next 3 months look while working on AI security:

Invisible supports enterprises and AI companies with frontier-grade red teaming, fine-tuning, and policy-informed evaluations. Our dedicated teams test AI systems the way real users and attackers do, aligning models with safe, compliant use across production environments.

Invisible has trained foundation models for more than 80% of the world’s leading AI providers. We combine global talent with automation to deliver research-grade evaluation and training data at enterprise speed.

Request a demo to see how Invisible helps teams identify AI risk early, strengthen guardrails, and deploy AI systems with confidence.

AI red teaming tests how AI systems and LLMs behave when someone tries to misuse or manipulate them. Traditional penetration testing only targets networks and infrastructure. On the other hand, AI red teaming evaluates AI behavior and safety controls.

Companies should first test AI vulnerabilities like prompt injection, jailbreaks, data leakage, and policy violations in their highest-impact workflows.

Run AI red teaming tests after major changes, such as model updates or adding new data sources. You can also schedule recurring tests quarterly or biannually to catch emerging risks. Add automated red teaming to CI/CD pipelines to test continuously before and after deployment.

Internal teams know the system and move faster. External red teamers bring fresh perspectives and deeper expertise. In high-risk areas like healthcare or finance, external specialists are often worth the cost.

AI red teaming is a core part of your safety strategy and entire AI lifecycle. Red teaming offers the real-world evidence you need to manage risk. Results from red teaming can help you improve your guardrails and update your training data.