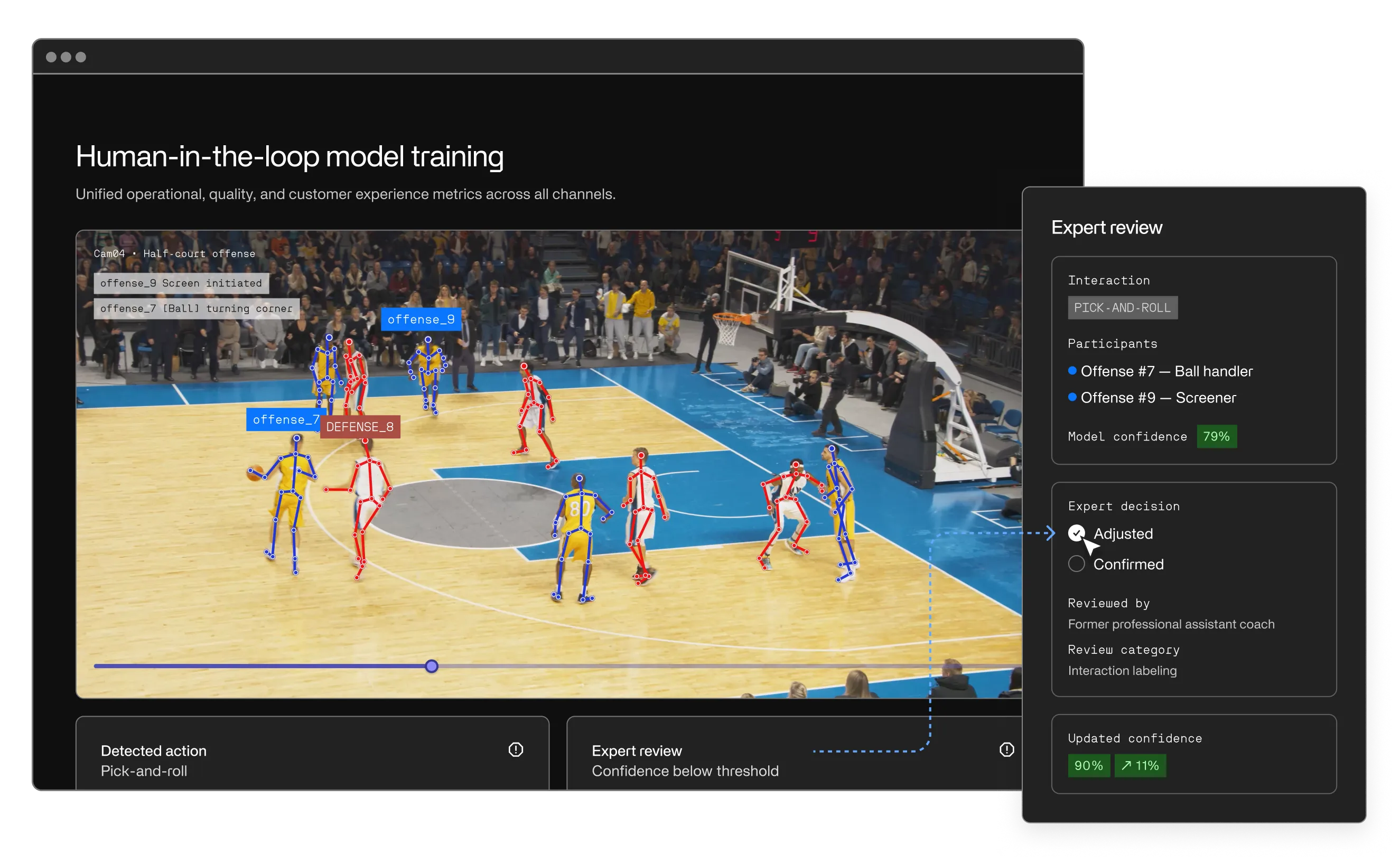

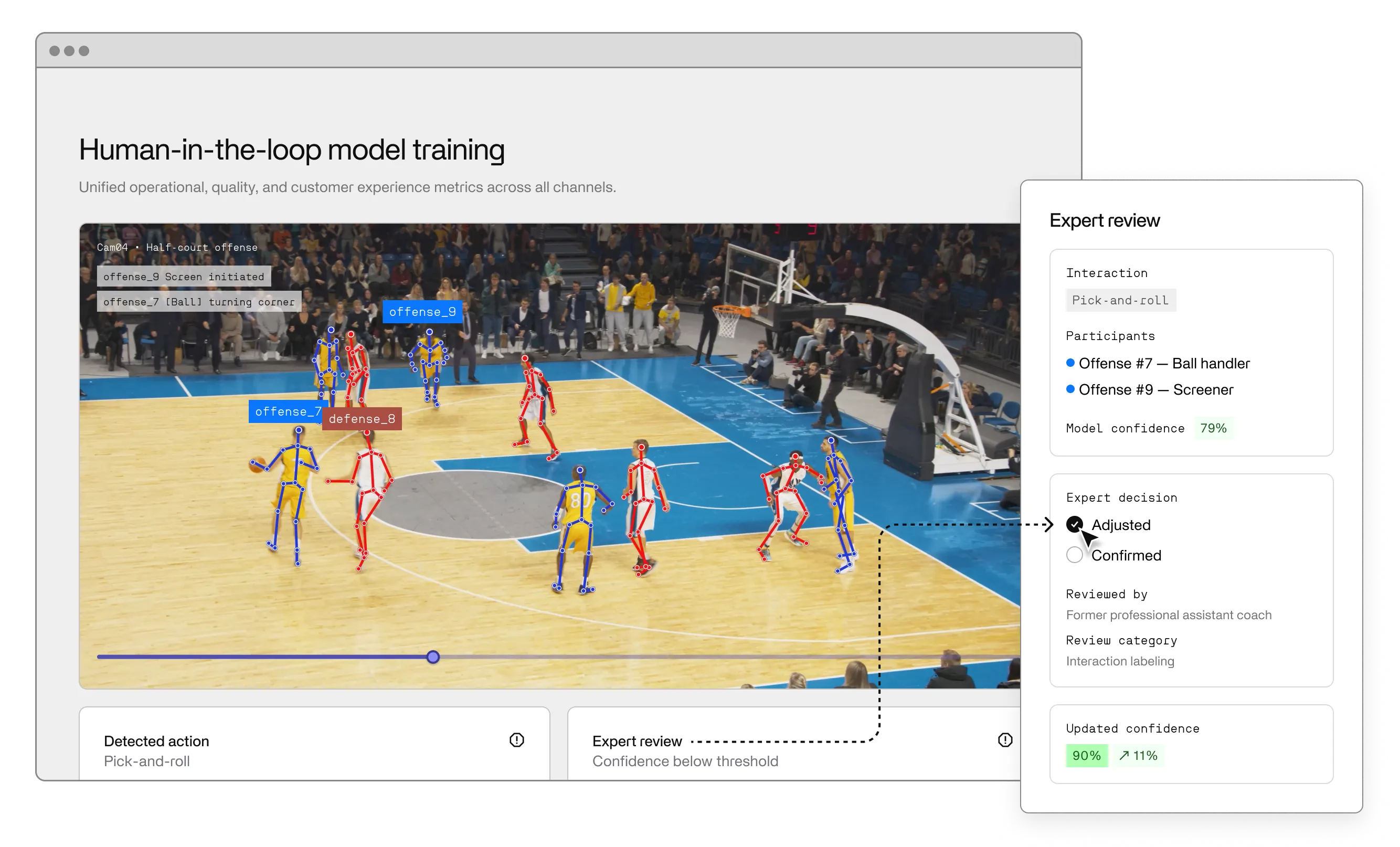

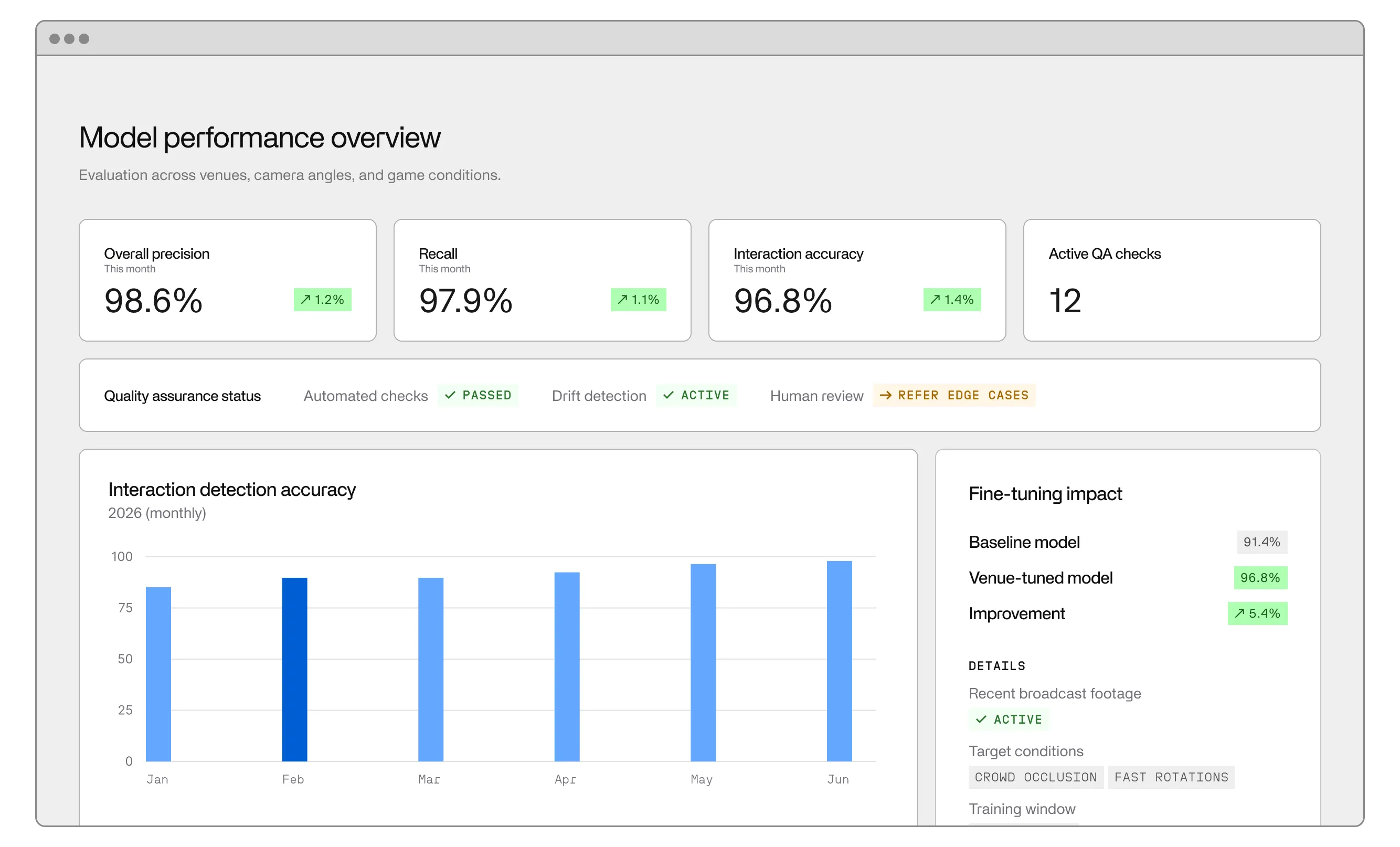

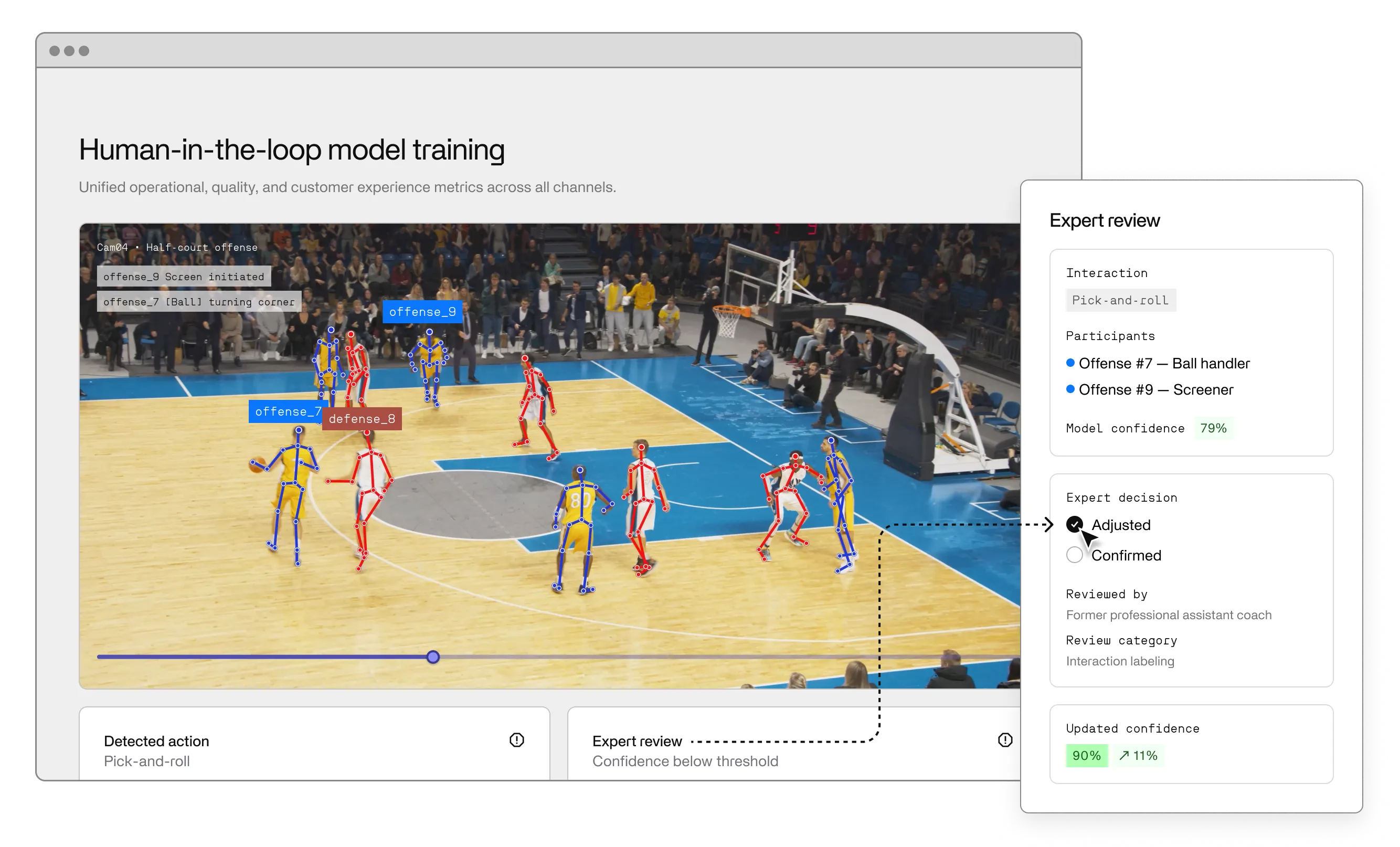

Models are fine-tuned on your data, then continuously evaluated and improved through human-in-the-loop validation.

Built for dynamic, complex environments from the court to the factory floor.

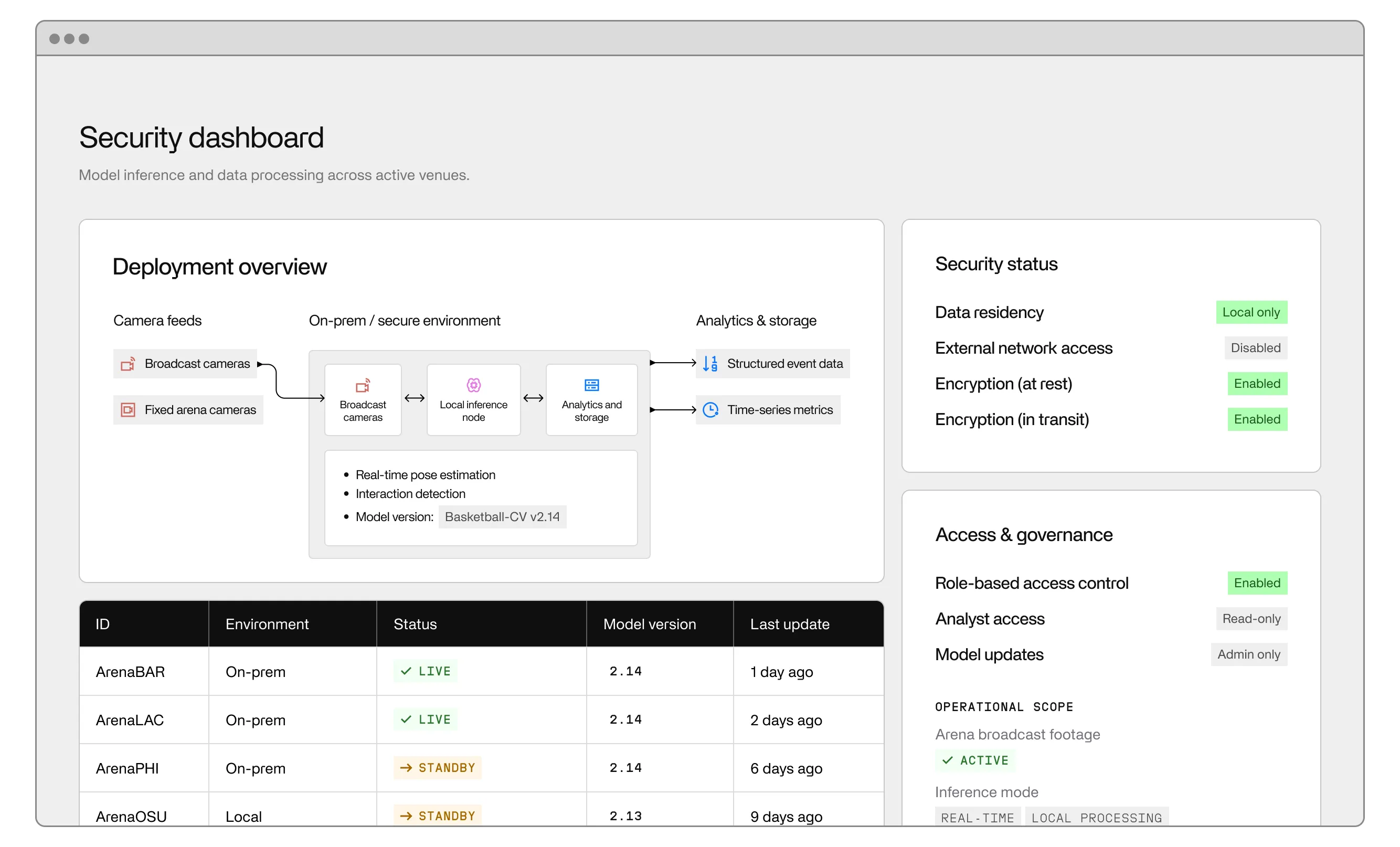

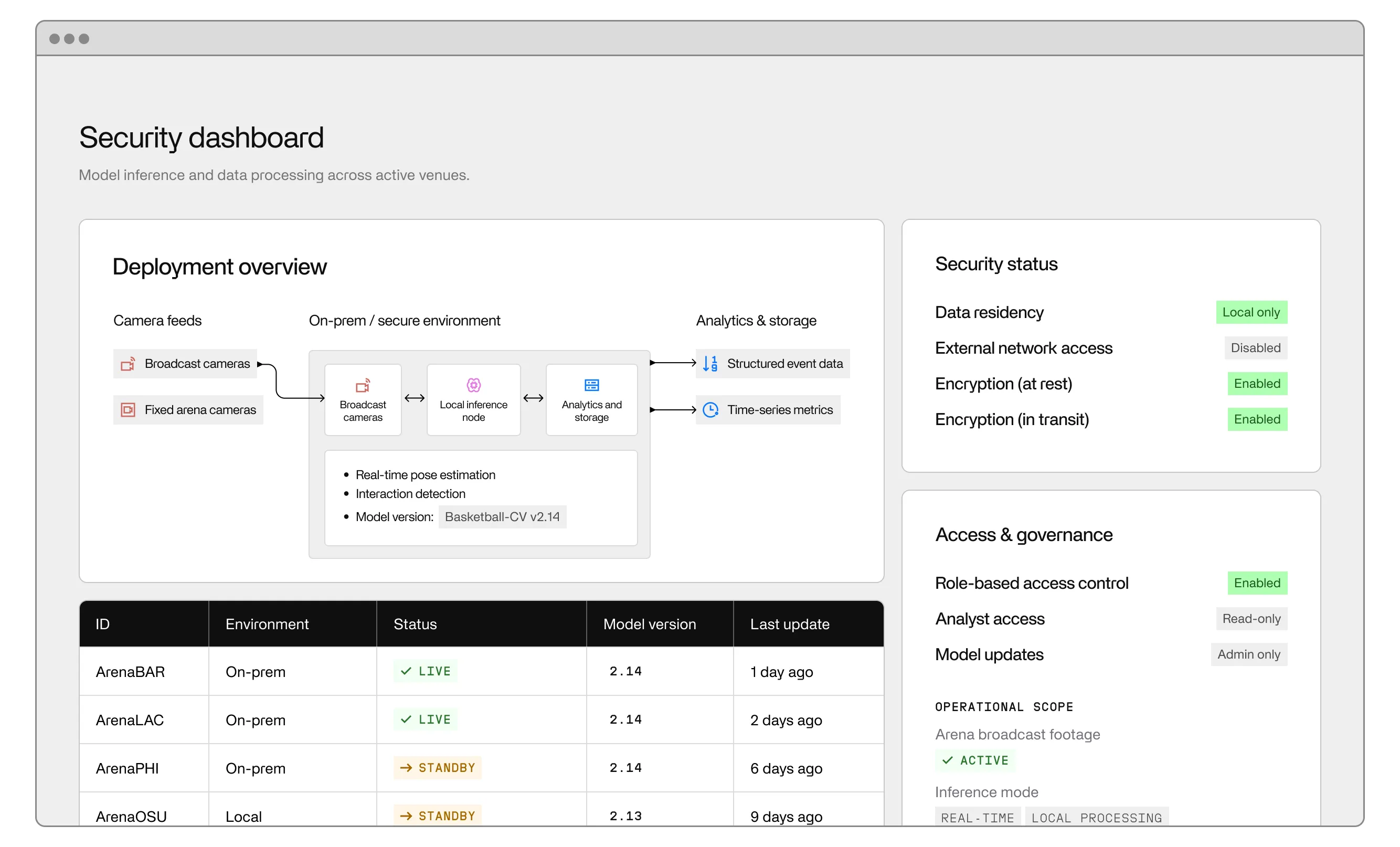

Deploy on-prem or in secure environments. No cloud dependency. No data leaving your control.

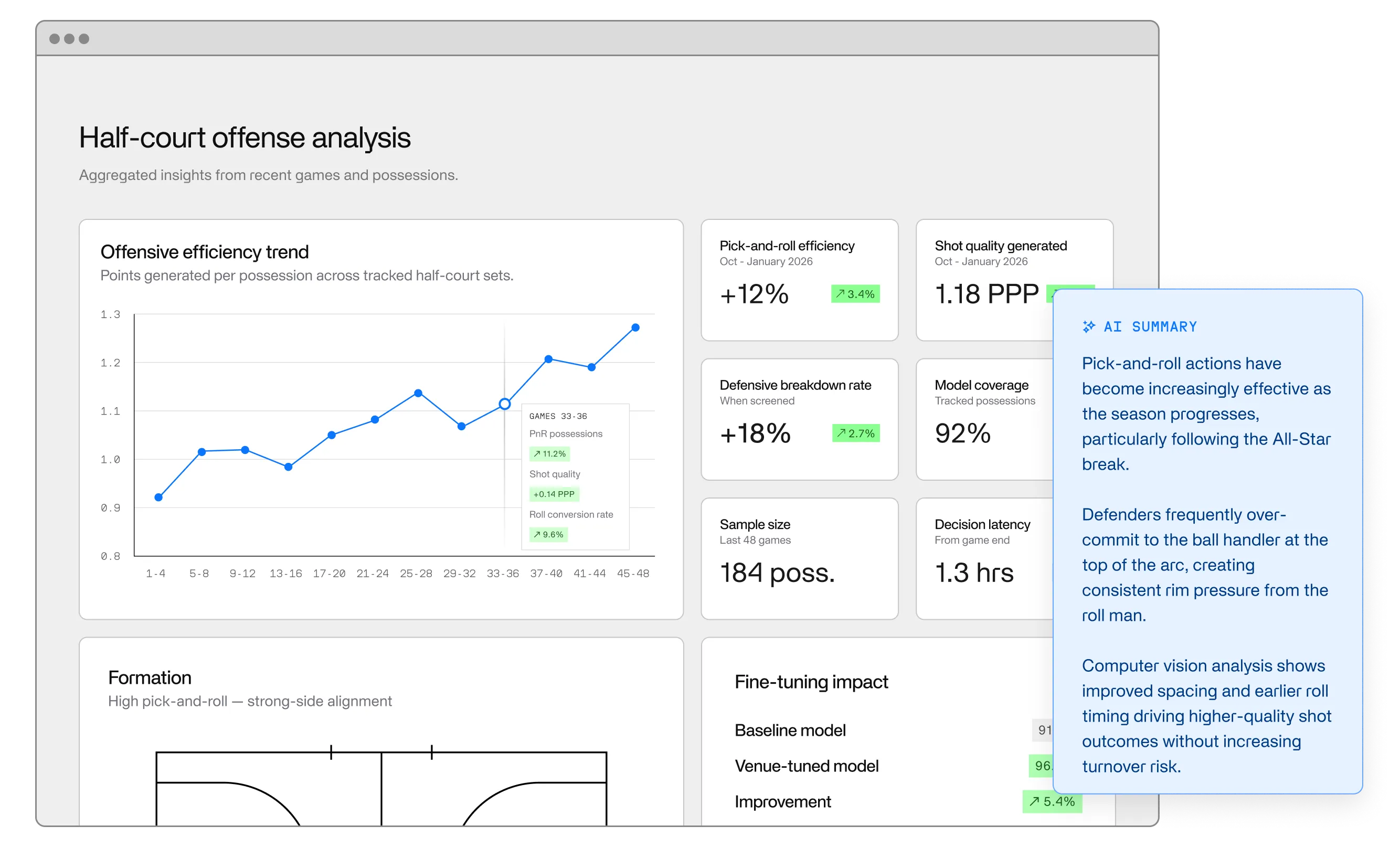

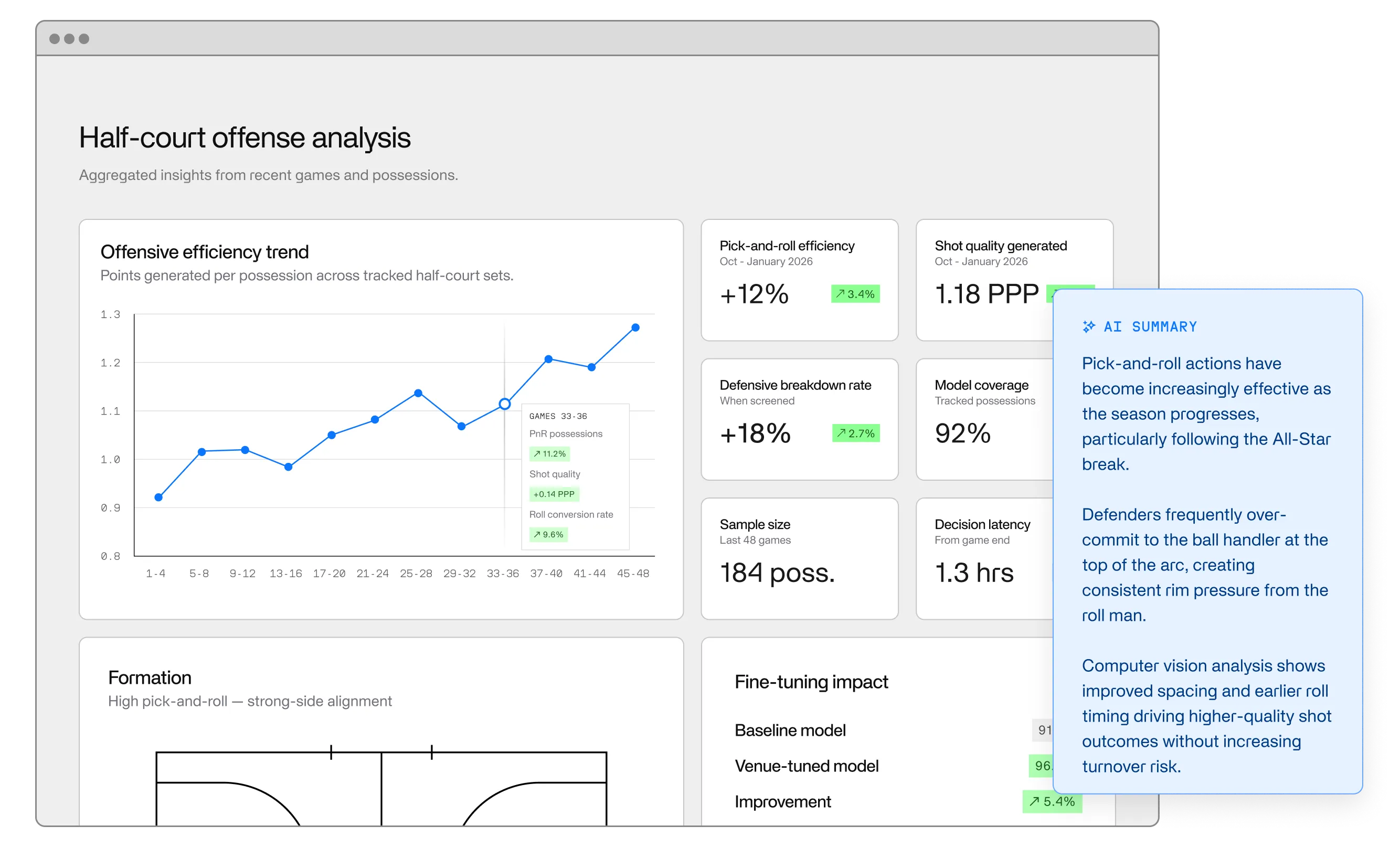

Instead of relying on people to watch and tag hours of footage, computer vision models automatically process streams and clips, extracting events, counts, and behaviors as time-series data. That makes it possible to answer questions like “where are the bottlenecks?”, “how often do safety violations occur?”, or “which layouts drive more engagement?” using dashboards, not manual review.

Yes. Computer vision models can run in secure, on-premise, or edge environments so video never has to leave your controlled infrastructure. You can process streams locally, store only derived signals or anonymized outputs, and apply your own access controls and retention policies to stay aligned with security and privacy requirements.

Computer vision models automatically detect objects, people, and activities in your raw video streams, then convert those detections into structured events and metrics. Instead of scrubbing through footage, teams can query dashboards for counts, patterns, and rule violations tied to specific sites, time windows, or behaviors.
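As a rough illustration of how detections might become queryable metrics, the Python sketch below groups per-frame detections into fixed time windows and counts distinct tracked objects per site and label. The `Detection` fields, the `unique_counts` helper, and the sample site name are hypothetical and chosen only for this example; a real platform's schema will differ.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detection emitted by a vision model (hypothetical schema)."""
    site: str         # assumed site identifier, e.g. "dock-1"
    timestamp: float  # seconds since the start of the stream
    label: str        # e.g. "person", "no_helmet"
    track_id: int     # assumed tracker ID, stable across frames


def unique_counts(detections, window_s=60):
    """Count distinct tracked objects per (site, window start, label)."""
    seen = defaultdict(set)
    for d in detections:
        # Snap the timestamp down to the start of its time window.
        window_start = int(d.timestamp // window_s) * window_s
        seen[(d.site, window_start, d.label)].add(d.track_id)
    return {key: len(ids) for key, ids in seen.items()}


detections = [
    Detection("dock-1", 3.0, "person", 1),
    Detection("dock-1", 4.0, "person", 1),   # same person, next frame
    Detection("dock-1", 10.0, "person", 2),
    Detection("dock-1", 75.2, "no_helmet", 3),
]
metrics = unique_counts(detections)
```

Counting track IDs rather than raw detections avoids counting the same person once per frame. The resulting dictionary is keyed by site, window, and label, which is the shape a dashboard query over sites and time windows would typically need.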

Yes, but you don’t need perfect, cinematic footage. Higher resolution, stable angles, and consistent lighting help models detect and track more accurately, while extremely low resolution, heavy glare, or constant occlusions can limit what the models can reliably detect. Many systems can be fine-tuned to your existing camera setup so you get value without replacing every device.

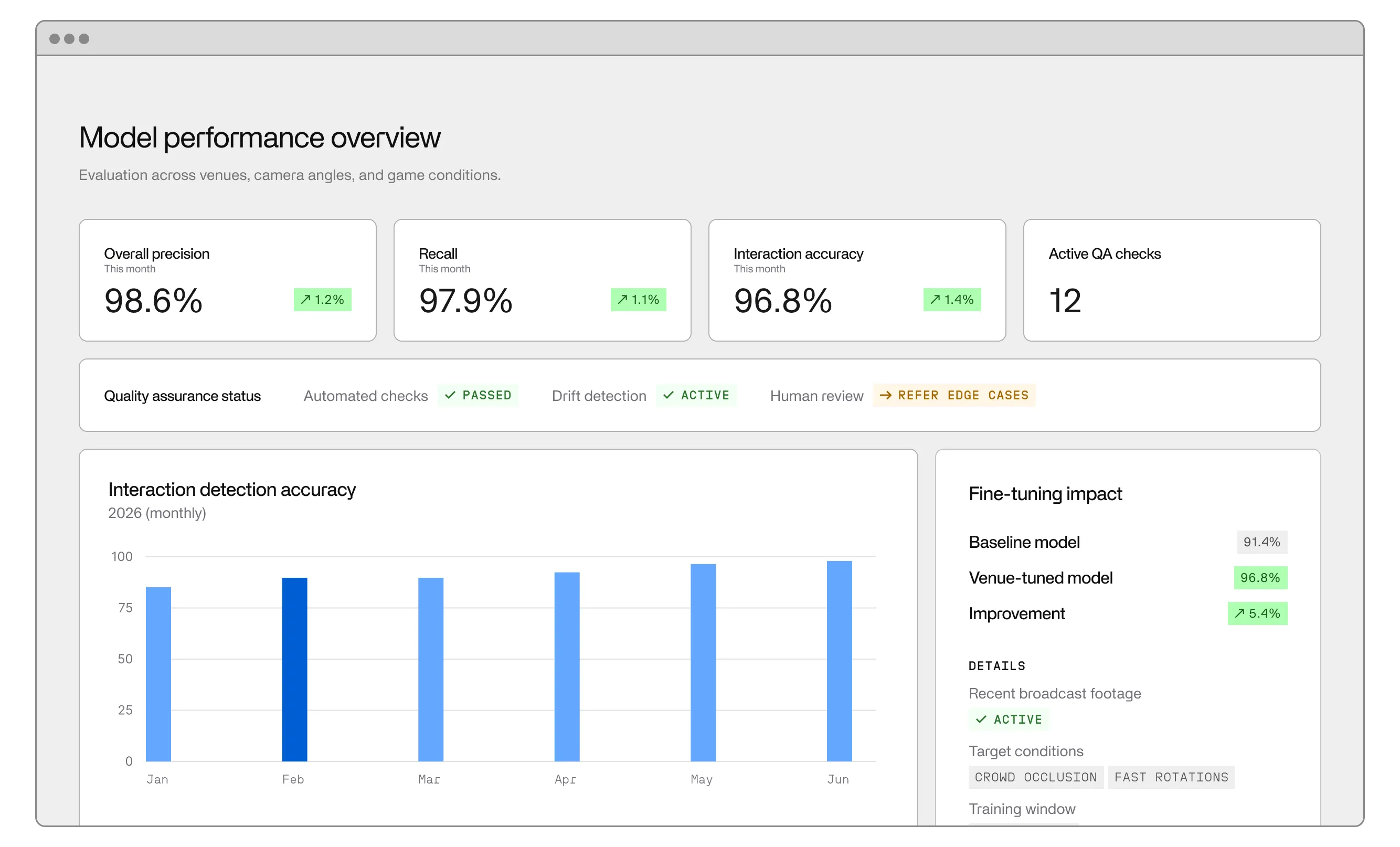

Yes. Modern computer vision platforms are built to process high volumes of live and recorded video by running models continuously and summarizing results as compact event streams. That makes it feasible to monitor thousands of hours of footage across sites without adding a matching number of human reviewers.
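One way to picture a compact event stream is to collapse consecutive per-frame detections of the same label into a single event with a start and end time. The sketch below is a minimal Python example under assumed inputs: the `(timestamp, label)` frame format, the `Event` type, and the `max_gap_s` tolerance are all hypothetical, not a description of any specific product.

```python
from dataclasses import dataclass


@dataclass
class Event:
    label: str
    start: float  # timestamp of the first frame in the event
    end: float    # timestamp of the last frame in the event


def collapse_to_events(frames, max_gap_s=1.0):
    """Merge per-frame (timestamp, label) pairs into interval events.

    Frames must be sorted by timestamp. Frames with the same label that
    are at most max_gap_s apart are merged into one event.
    """
    open_events = {}  # label -> event currently being extended
    closed = []
    for ts, label in frames:
        ev = open_events.get(label)
        if ev is not None and ts - ev.end <= max_gap_s:
            ev.end = ts  # still the same event; extend it
        else:
            if ev is not None:
                closed.append(ev)  # gap too large; close the old event
            open_events[label] = Event(label, ts, ts)
    closed.extend(open_events.values())
    return sorted(closed, key=lambda e: (e.start, e.label))


frames = [(0.0, "person"), (0.5, "person"), (1.0, "person"),
          (5.0, "person"), (5.5, "person")]
events = collapse_to_events(frames)
```

Here five frame-level detections reduce to two events, which is the kind of reduction that lets long recordings be stored and reviewed as summaries rather than raw footage.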